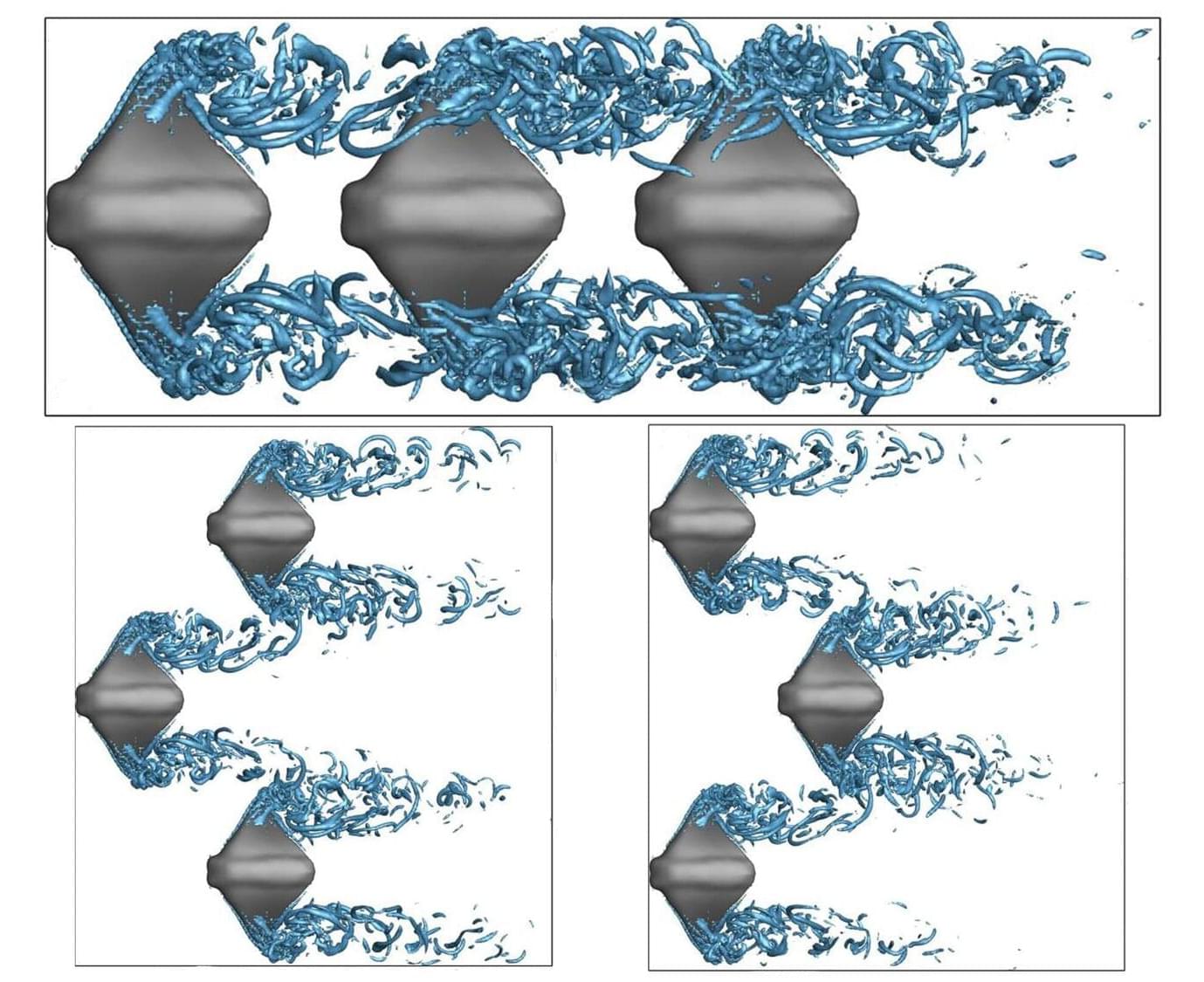

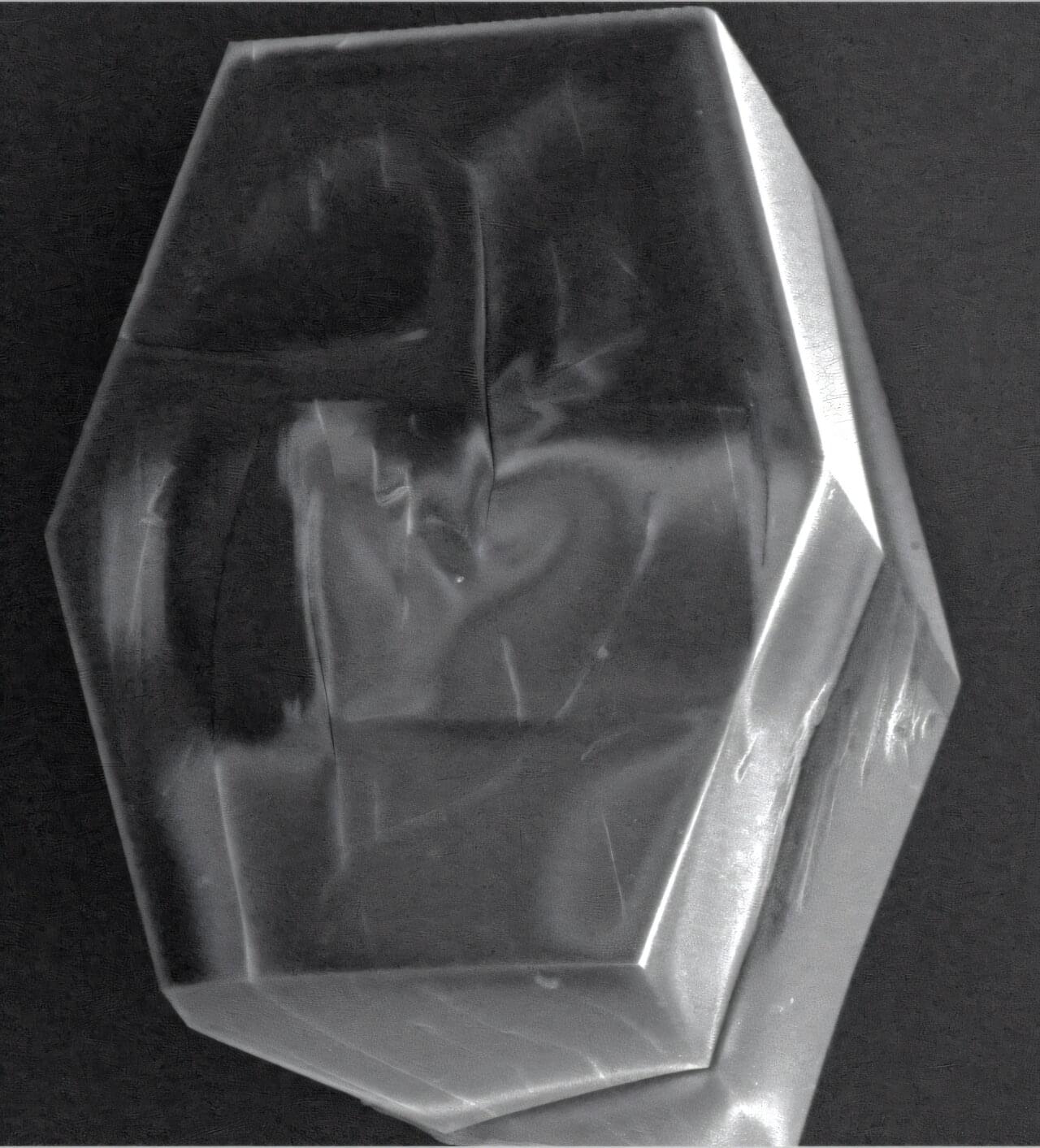

From bird flocking to fish schooling, many biological systems exhibit some form of collective motion, often to improve performance and conserve energy. Manta rays are particularly efficient swimmers compared with other species, and their large aspect ratio helps them generate high lift relative to drag. These properties make their collective motion especially relevant to complex underwater operations.

To understand how group dynamics affect their propulsion, researchers from Northwestern Polytechnical University (NPU) and the Ningbo Institute of NPU, in China, modeled the motion of groups of manta rays. They present their results in Physics of Fluids.

“As underwater operation tasks become more complex and often require multiple underwater vehicles to carry out group operations, it is necessary to take inspiration from the group swimming of organisms to guide formations of underwater vehicles,” said author Pengcheng Gao. “Both the shape of manta rays and their propulsive performance are of great value for biomimicry.”