When the team posted their proof in August, many mathematicians were excited. It was the biggest advance in the classification project in decades, and it hinted at a new way to classify polynomial equations well beyond four-folds.

But other mathematicians weren’t so sure. Six years had passed since the lecture in Moscow. Had Kontsevich finally made good on his promise, or were there still details to fill in?

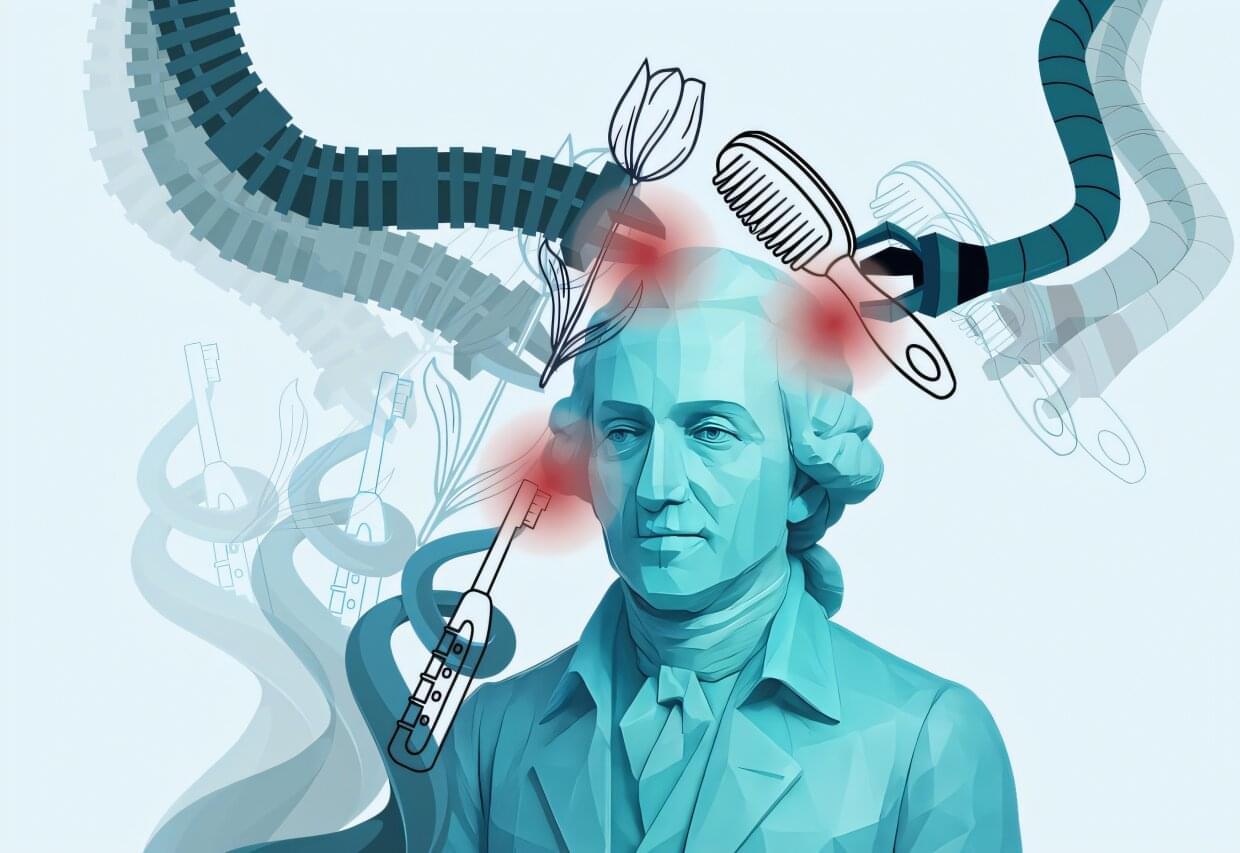

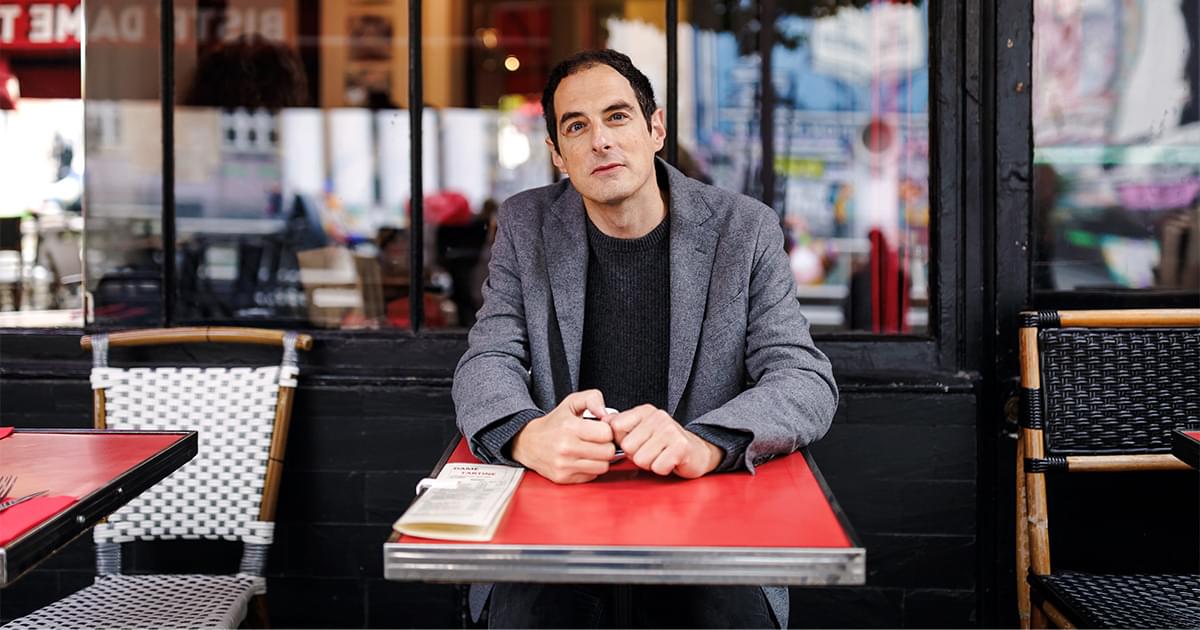

And how could they assuage their doubts, when the proof’s techniques were so completely foreign — the stuff of string theory, not polynomial classification? “They say, ‘This is black magic, what is this machinery?’” Kontsevich said.