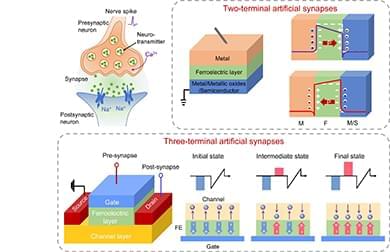

Neuromorphic computing provides alternative hardware architectures with high computational efficiency and low energy consumption by emulating the working principles of the brain, using artificial neurons and synapses as building blocks. This approach helps overcome the speed bottleneck and high power consumption that stem from the physical separation of memory and processing units in conventional von Neumann architectures. Among emerging neuromorphic electronic devices, ferroelectric artificial synapses have attracted extensive interest for their good controllability, deterministic resistance switching, large dynamic range of output signals, and excellent retention. This Perspective briefly reviews recent progress in two- and three-terminal ferroelectric artificial synapses, represented by ferroelectric tunnel junctions and ferroelectric field-effect transistors, respectively. The device structures and operating mechanisms are described, and the issues that limit synaptic performance, including the linearity and symmetry of synaptic weight updates, power consumption, and device miniaturization, are discussed together with corresponding solutions. Functions required for advanced neuromorphic systems, such as multimodal and multi-timescale synaptic plasticity, are also summarized. Finally, the remaining challenges for ferroelectric synapses and possible countermeasures are outlined.
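To make "linearity and symmetry of synaptic weight updates" concrete, the sketch below assumes a saturating-exponential conductance-versus-pulse-number model of the kind often used in device-to-network simulations. The model, the nonlinearity constant `a`, the example conductance values, and the two metric functions are illustrative assumptions, not taken from this Perspective or from measured ferroelectric devices. A small `a` makes identical pulses change the conductance by very different amounts (nonlinearity), and the potentiation and depression branches then fail to retrace each other (asymmetry).

```python
import math
from typing import List


def potentiation_curve(n_pulses: int, g_min: float, g_max: float, a: float) -> List[float]:
    """Conductance after 0..n_pulses identical potentiating pulses.

    Assumed saturating-exponential model (illustrative, not device data):
        G(p) = g_min + B * (1 - exp(-p / a)),
    with B chosen so that G(n_pulses) == g_max. Larger `a` -> more linear.
    """
    b = (g_max - g_min) / (1.0 - math.exp(-n_pulses / a))
    return [g_min + b * (1.0 - math.exp(-p / a)) for p in range(n_pulses + 1)]


def depression_curve(n_pulses: int, g_min: float, g_max: float, a: float) -> List[float]:
    """Conductance after 0..n_pulses identical depressing pulses, starting at
    g_max and ending at g_min; same assumed model, largest steps first."""
    b = (g_max - g_min) / (1.0 - math.exp(-n_pulses / a))
    return [g_max - b * (1.0 - math.exp(-p / a)) for p in range(n_pulses + 1)]


def max_nonlinearity(curve: List[float]) -> float:
    """Largest deviation from the straight line joining the curve's endpoints,
    as a fraction of the total conductance change (0 = perfectly linear)."""
    n = len(curve) - 1
    g_start, g_end = curve[0], curve[-1]
    ideal = [g_start + (g_end - g_start) * p / n for p in range(n + 1)]
    return max(abs(g - i) for g, i in zip(curve, ideal)) / abs(g_end - g_start)


def ltp_ltd_mismatch(up: List[float], down: List[float]) -> float:
    """Hypothetical asymmetry metric: compare the state after p potentiating
    pulses with the state after (N - p) depressing pulses. An ideal symmetric
    device reaches identical states, i.e. a mismatch of 0."""
    n = len(up) - 1
    span = abs(up[-1] - up[0])
    return max(abs(up[p] - down[n - p]) for p in range(n + 1)) / span


if __name__ == "__main__":
    g_min, g_max, pulses = 1e-6, 1e-5, 32  # siemens; arbitrary example values
    for a in (5.0, 20.0, 100.0):
        up = potentiation_curve(pulses, g_min, g_max, a)
        down = depression_curve(pulses, g_min, g_max, a)
        print(f"a={a:6.1f}  nonlinearity={max_nonlinearity(up):.3f}  "
              f"LTP/LTD mismatch={ltp_ltd_mismatch(up, down):.3f}")
```

Both metrics shrink toward zero as `a` grows, which is the regime that training algorithms relying on predictable, equal-sized weight increments would favor. Real devices may follow other update laws, so the exponential form should be read as a placeholder rather than a fitted model.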