Imagine the tiniest game of checkers in the world: one played by using lasers to shuffle ions precisely across a very small grid.

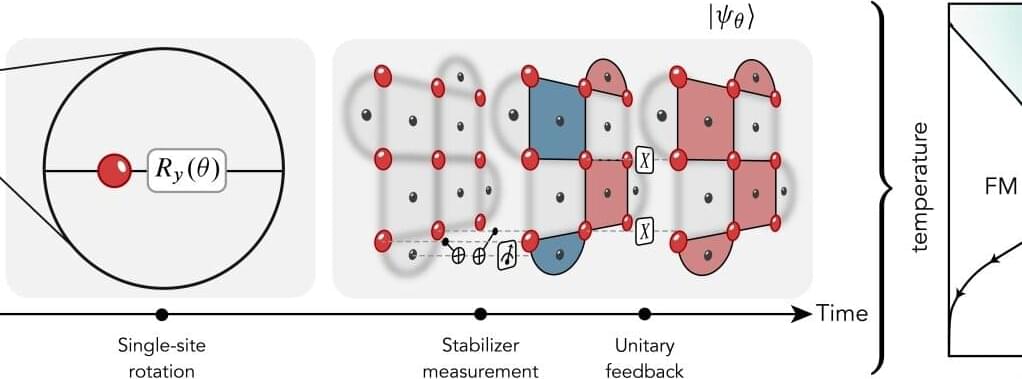

That’s the idea behind a recent study published in the journal Physical Review Letters. A team of theoretical physicists from Colorado has designed a new type of quantum “game” that scientists can play on a real quantum computer, a device that manipulates small objects such as atoms to perform calculations.

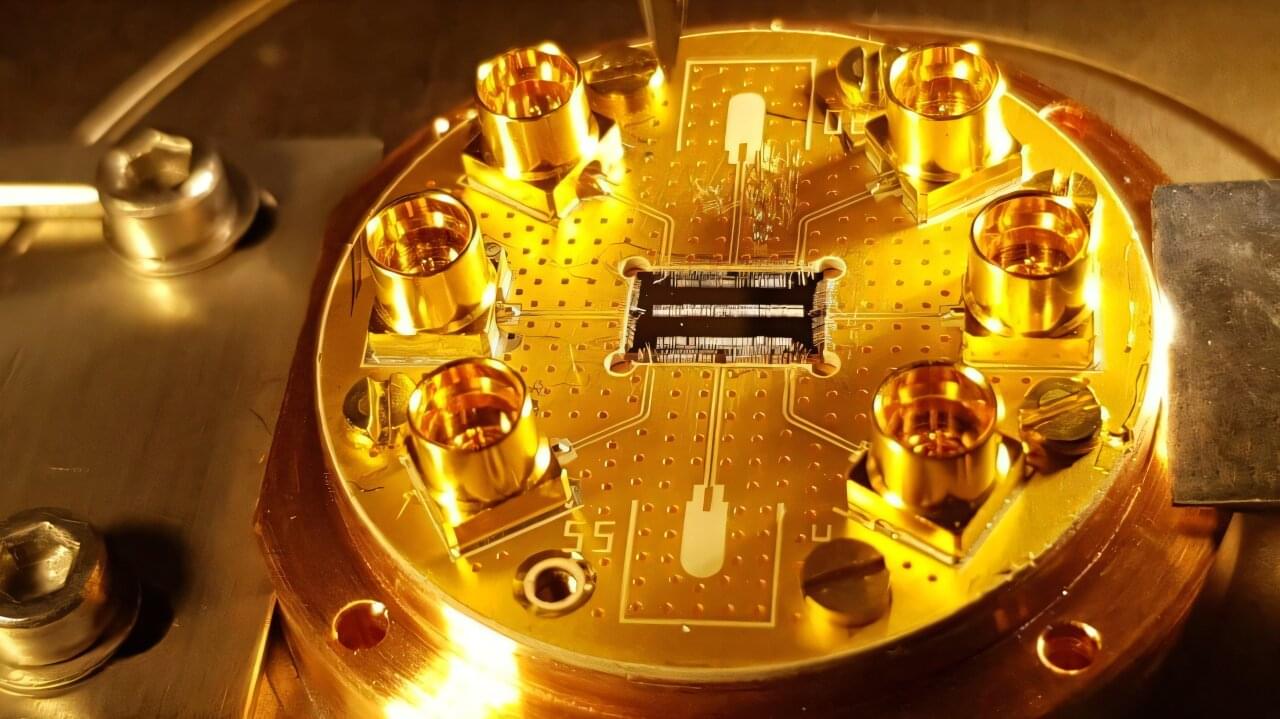

The researchers even tested their game on one such device, the Quantinuum System Model H1 Quantum Computer. The study was a collaboration between scientists at the University of Colorado Boulder and Quantinuum, a company based in Broomfield, Colorado.