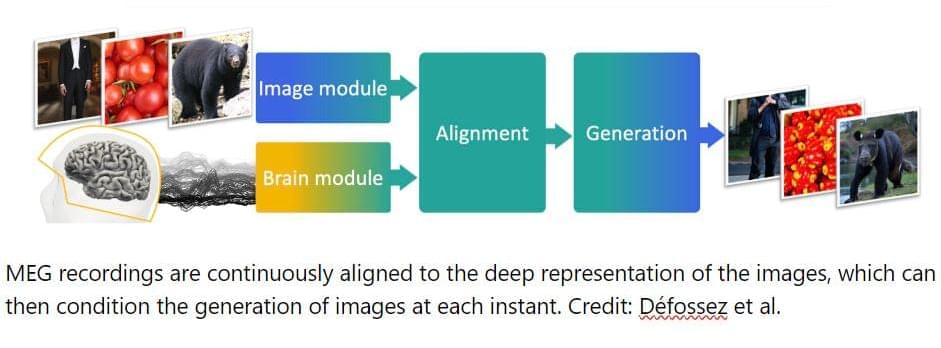

Among information-processing systems, nothing matches the human brain for power and complexity. With roughly 86 billion neurons and up to a quadrillion synapses, the brain processes information with unparalleled capability. Traditional computers keep processing and memory in physically separate units. The brain, by contrast, owes much of its efficiency to using the same structures as both processor and memory. Seeing the potential in harnessing this design, researchers have long worked to build computing systems that behave more like the brain.

Efforts to mimic the brain’s activity in artificial systems have been ongoing, but progress has been limited. Even RIKEN’s K computer, one of the most powerful supercomputers in the world at the time, could simulate only a fraction of the brain’s activity. Using 82,944 processors and a petabyte of main memory, it took 40 minutes to simulate one second of activity in a network of 1.73 billion neurons connected by 10.4 trillion synapses. That network represented only about one to two percent of the brain’s own.
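The figures in the paragraph above can be turned into concrete ratios. The short Python sketch below does this using the article’s whole-brain totals as the denominators: 86 billion neurons, and a quadrillion synapses as the upper bound. The constant and function names are illustrative only.

```python
# Figures quoted for the K computer simulation
SIM_WALL_SECONDS = 40 * 60      # 40 minutes of computing time...
SIM_BIO_SECONDS = 1.0           # ...to simulate 1 second of activity
SIM_NEURONS = 1.73e9            # simulated neurons
SIM_SYNAPSES = 10.4e12          # simulated synapses

# Whole-brain figures quoted earlier in the article
BRAIN_NEURONS = 86e9
BRAIN_SYNAPSES_UPPER = 1e15     # "up to a quadrillion"


def slowdown_factor(wall_s: float, bio_s: float) -> float:
    """How many times slower than real time the simulation ran."""
    return wall_s / bio_s


def percent_of(part: float, whole: float) -> float:
    """Express part as a percentage of whole."""
    return 100.0 * part / whole


slowdown = slowdown_factor(SIM_WALL_SECONDS, SIM_BIO_SECONDS)
neuron_pct = percent_of(SIM_NEURONS, BRAIN_NEURONS)
synapse_pct = percent_of(SIM_SYNAPSES, BRAIN_SYNAPSES_UPPER)

print(f"Slowdown vs. real time: {slowdown:.0f}x")               # 2400x
print(f"Share of brain neurons: {neuron_pct:.1f}%")             # 2.0%
print(f"Share of synapses (upper bound): {synapse_pct:.2f}%")   # 1.04%
```

Measured this way, the simulation ran about 2,400 times slower than real time. It covered roughly 2 percent of the brain’s neurons and about 1 percent of its synapses when measured against the upper-bound estimate. This is plausibly where the “one to two percent” range comes from.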

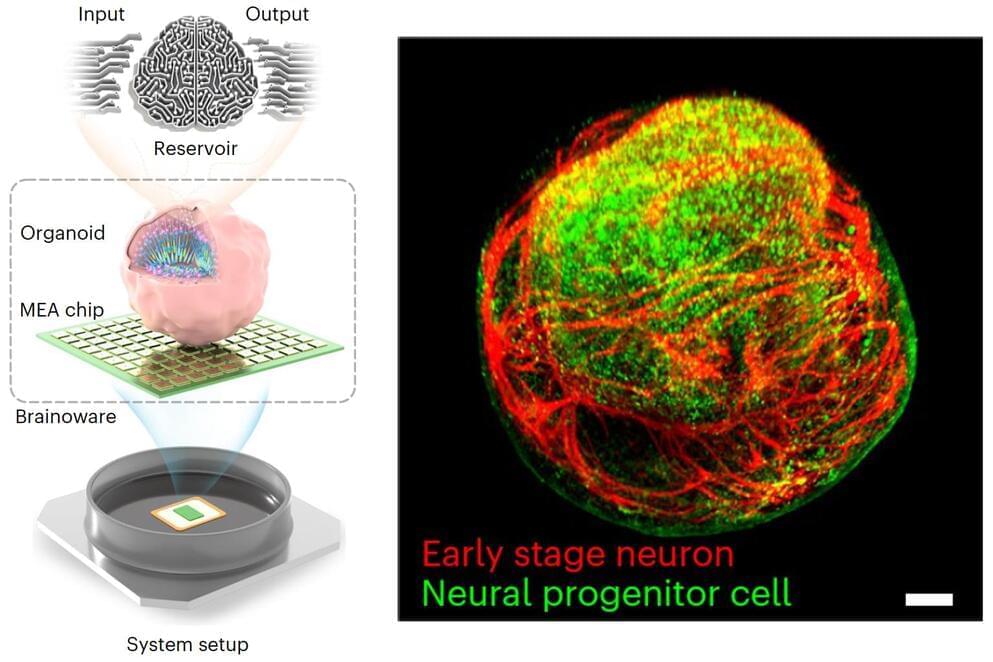

In recent years, scientists and engineers have turned to neuromorphic computing, which aims to replicate the brain’s structure and function. By designing hardware and algorithms that mimic the brain, researchers hope to overcome the limitations of traditional computing and improve energy efficiency. Despite significant progress, however, the field still faces challenges, including energy consumption that remains high and the time-consuming training of artificial neural networks.