Can robotic exoskeletons help kids with cerebral palsy walk?


Star Wars Prosthetic Arm

This ‘Star Wars’-inspired prosthetic arm gives amputees the ability to feel again.

Technological Singularity — Artificial Intelligence (AI)

Human intelligence is not linear. Machine intelligence can be summed up in three words: efficiency, efficacy and trade-off. The more we automate human thinking, the less we need humans. Get it?

From the subtle advancements in technology to the birth of Skynet! Join us as we explore facts about the Technological Singularity.

11. What Is The Technological Singularity?

What’s that? You don’t know what it is? No worries; it is a pretty scientific term.

To quote Wikipedia, the Technological Singularity “is a hypothetical future point in time at which technological growth becomes uncontrollable and irreversible, resulting in unfathomable changes to human civilization.”

What’s more, this is NOT a new theory or idea, and it honestly wasn’t invented by sci-fi movies. It was popularized by Vernor Vinge in his 1993 essay “The Coming Technological Singularity.” And while this may sound like a “sci-fi future”, many people believe not only that it will come, but that it could arrive as soon as 2050.

10. Where Are We Now In The Technological Singularity?

To understand how the Technological Singularity could happen, we first need to understand where we are as a society today, and which current trends could lead us to the future that many fear.

9. Intelligence Boom

The key term to note here is “Intelligence Boom” (more commonly called an “intelligence explosion”). No, I don’t mean our own brains exploding (that would be bad…), but rather a boom of potential via Artificial Intelligence. This is one of the potential “outcomes” of a Technological Singularity.

Think of it like this. Every generation of computer we make is technically better than the last, right? The difference with an Intelligence Boom is that the A.I. is the one “making” the next generation. That’s a scary thought, huh? And that’s actually one reason many are wary of research into super-intelligent (and ever-evolving) A.I.s. They include the late Stephen Hawking and eccentric billionaire Elon Musk, who have both warned that A.I. could doom humanity, whether through an Intelligence Boom or something of our own making.

8. Making A “Better Tomorrow”

There is another way many argue the Technological Singularity could come about via A.I.: simply by creating an A.I. ourselves that goes far beyond what we intended it to be. That may not be as far-fetched as you might think.

If I were to say the names Alexa, Siri, and Watson, you’d recognize them as machines with various kinds of intelligence, right? Well, technically, they’re all A.I., just with different levels of intelligence. Siri came first, responding to voice commands on your iPhone or iPad. Some think we are now very close to the Singularity, including a man named Ray Kurzweil, who believes we could reach it by 2045.

7. The Predictions Of Ray Kurzweil Part 1

If you’re not familiar with Ray Kurzweil, you honestly should read up on him. He’s not just another guy predicting the end of civilization. He’s actually an engineer at Google who sees himself as a futurist, and one who has made a number of accurate predictions about technological advances in the past.

6. Robotics

When you think of the “future” that humanity “wants” and that various sci-fi books and movies have “predicted”, the obvious things you see are robots and people with robotic appendages. Let’s look at robots first. The Technological Singularity scenario holds that as robots get more advanced, humans will become less and less important. It’s all part of the “A.I. Overlord” scenario, if you will. Then again, WE could become the robots, not unlike a certain cybernetic race with brilliant intelligence: the Borg.

5. Artificial Limbs and Cyborgs

One of the most worrying things a person can face in life is the possibility of losing a limb, and that loss is not something that can be easily overcome.

4. The Predictions Of Ray Kurzweil Part 2

But again, the question becomes, “How far are we from that future?” If Ray Kurzweil is to be believed, not as far as you think. He believes a key milestone on the way to the Singularity, computers reaching human-level intelligence, will come in 2029, a mere decade in the future.

3. 2049

According to Kurzweil, by 2049 humanity will have become so intertwined with technology that it’s hard to tell what’s real and what’s not. For example, he predicts machines that can make just about anything the user wants. They’re called Foglets, and they will be all around every person on Earth. They can even make food so good you’ll swear it’s “natural” even though it’s not.

2. Skynet

Yep, that’s the “nuclear option”: the notion that we humans create a technology or intelligence so powerful that one day it realizes humanity… is actually inferior to it, and then goes to extreme lengths to make sure humanity is wiped off the face of the Earth.

1. Will The Technological Singularity Happen?

That is the question, isn’t it? Is Judgment Day inevitable?

Transhumanism and Immortality

I am in shock… Google, like Yahoo, is suddenly allowing conjecture and mendacity to be presented as public or scientific opinion. Here is another confused mind who, toward the end of her rant, quotes Christian scripture as a basis for stopping life extension and transhumanism.

To these minds I say: behold, the leader of Christianity stood for abundant life and super longevity, and I can prove it. No matter what any lost evangelist or preacher tells you, Jesus was a medical researcher of extraordinary magnitude…

NOW BEHOLD THE LOST in this article… https://www.rodofironministries.com/…/transhumanism-and-imm… Respect, r.p.berry & AEWR, wherein aging now ends. We have found the many causes of aging and have located an expensive cure. We are searching for partners and investors to join us in ending aging… gerevivify.blogspot.com/

Whether we like it or not, the hybrid age is already here. From genetic manipulation to AI, nanotechnology, robotics, 3D printing, brain mapping and supercomputing, the list seems endless. Futurists and transhumanist philosophers believe that science and technology are limitless, and that humanity’s current cultural traditions and mindset are what hold human development back.

Mankind has experienced formidable technological growth before, from our early ancestors to the Agrarian Age, the Industrial Age of the 18th century and the Information Age of the 1970s. From their point of view, we are simply going through another revolutionary leap into what they call the Hybrid Age.

The Transhumanist Movement, also known as H+, is an intellectual, cultural and political movement that supports technological enhancement of the human body through genetics, robotics, synthetic biology and AI technology, among others. Its aim is to modify the physiology, psyche, memory and progeny of human beings and ultimately to achieve immortality on Earth (a goal its proponents believe can be reached within the next decade), whereby human consciousness could be uploaded into a robot, a cyborg or possibly a human clone.

The sci-fi novel “Altered Carbon” by Richard K. Morgan captures a cornucopia of technological concepts that are, believe it or not, in experimental stages across the globe: human hybridization; CRISPR technology (Chinese scientists have used CRISPR for gene editing on 86 human patients); limb regeneration; bionic augmentation; the making of super-soldiers; cloning; cryonics and the growing interest in information-theoretic death; neuropreservation; suspended animation; molecular nanotechnology; and so on. Sounds far-fetched, right?

Bionic neurons could enable implants to restore failing brain circuits

Scientists say creation could be used to circumvent nerve damage and help paralysed people regain movement.

Ian Sample, science editor

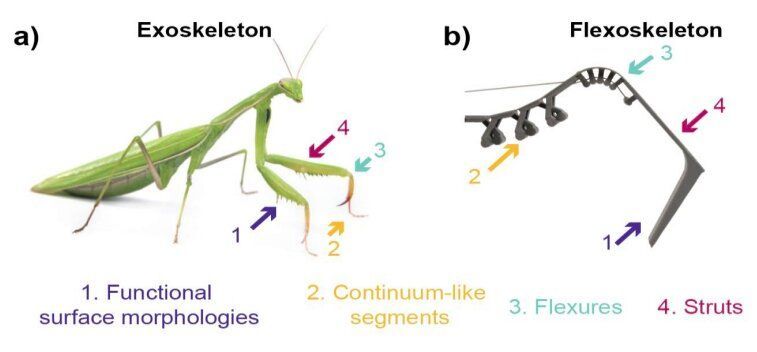

Flexoskeleton printing: Fabricating flexible exoskeletons for insect-inspired robots

Insects typically have a variety of complex exoskeleton structures, which support them in their movements and everyday activities. Fabricating artificial exoskeletons for insect-inspired robots that match the complexity of these naturally occurring structures is a key challenge in the field of robotics.

Although researchers have proposed several fabrication processes and techniques to produce exoskeletons for insect-inspired robots, many of these methods are extremely complex or rely on expensive equipment and materials. This makes them impractical and difficult to apply on a wider scale.

With this in mind, researchers at the University of California San Diego have recently developed a new process to design and fabricate components for insect-inspired robots with exoskeleton structures. They introduced this process, called flexoskeleton printing, in a paper pre-published on arXiv.

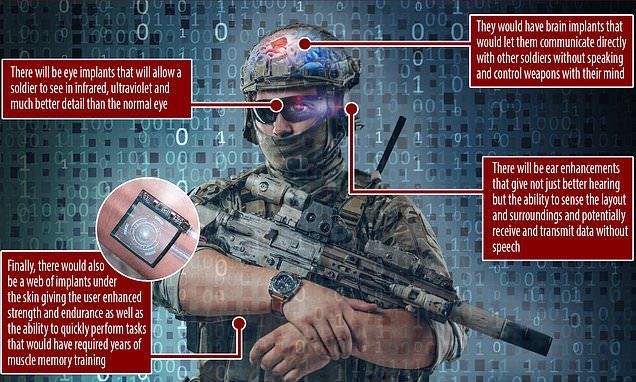

US Military scientists create plan for future ‘cyborg super soldier’

Future armies could be made up of half-human half-machine cyborgs with infrared sight, ultrasonic hearing and super strength, equipped with mind-controlled weapons.

In a US Army report, experts from Devcom — the Combat Capabilities Development Command — outlined a number of possible future technologies that could be used to enhance soldiers on the battlefield by 2050.