Heavier users of Twitter are more positive about Elon Musk’s X rebranding, according to a new poll from CivicScience.

According to a person with direct knowledge of the matter, representatives from Tesla are planning to meet India’s commerce minister this month to discuss the possibility of constructing a factory for producing an all-new $24,000 electric car. Tesla has expressed interest in manufacturing low-cost electric vehicles for both the local Indian market and exports. This meeting would mark the most significant discussions between Tesla and the Indian government since Elon Musk’s meeting with Prime Minister Narendra Modi in June, where he expressed his intention to make a substantial investment in the country.

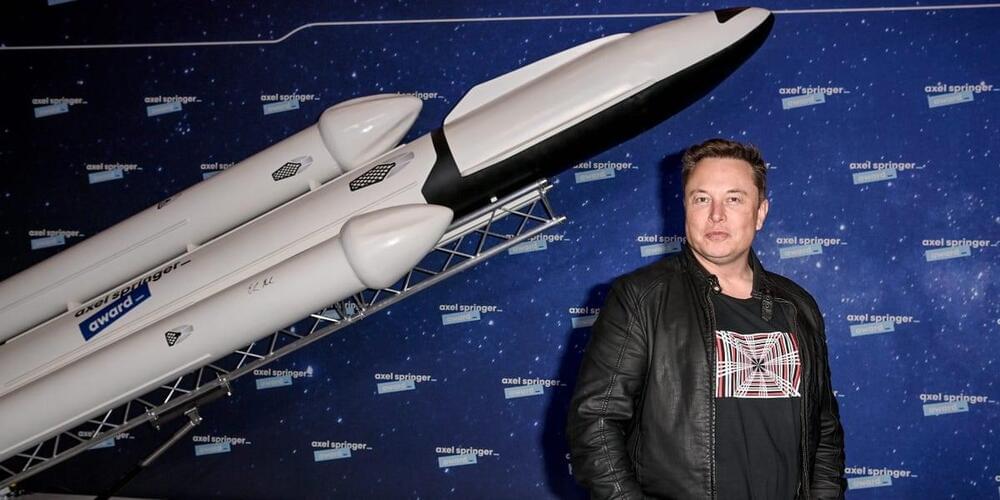

Elon Musk recently stated SpaceX made ‘well over a thousand changes’ to Starship since its debut flight.

SpaceX continues to prepare for the second orbital launch attempt of Starship despite concerns over a potential delay caused by the ongoing environmental lawsuit against the Federal Aviation Administration (FAA).

Elon Musk’s private space company, likely eager to show that preparations continue uninterrupted, has shared a number of images on Twitter of Booster 9, the Super Heavy prototype that will be used for the massive rocket’s second test flight.

Elon Musk announced plans on Saturday to ditch Twitter’s bird logo for an “X” — a reference to the CEO’s vision to create an all-encompassing “everything app” that may incorporate shopping and banking services, among other features.

The domain X.com now redirects users to Twitter. Musk said a new “interim” X logo will go live Sunday. He did not respond to a request for comment.

There are now over 1.9 million orders for the long-awaited Tesla Cybertruck, per a crowd-sourced data tracker. Speaking on an earnings call earlier this week, Tesla CEO Elon Musk stated that demand for the Cybertruck is “so off the hook, you can’t even see the hook.”

Given that Tesla plans to produce 375,000 Cybertrucks a year at peak capacity, working through the 1.9 million existing orders would technically take around 5 years, so new orders face a long wait. That said, a significant number of reservation holders may not follow through with their purchase; after all, the deposit to reserve a Cybertruck was only $100. The Cybertruck is being produced at Giga Texas, although it could also be built at Giga Mexico once the proposed factory is up and running in a few years’ time.
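For readers who want to check the wait-time arithmetic, here is a rough sketch. It uses only the two figures quoted above and assumes Tesla builds at peak capacity from day one, which is optimistic. The `follow_through` parameter and the 50% conversion rate are hypothetical, included only to show how cancellations would shorten the queue.

```python
# Figures quoted in the article; actual production and deliveries will differ.
open_orders = 1_900_000        # reservations, per the crowd-sourced tracker
peak_annual_output = 375_000   # planned Cybertrucks per year at peak capacity


def years_to_clear(orders, per_year, follow_through=1.0):
    """Years needed to build every reservation that converts into a purchase.

    follow_through is a hypothetical share of reservation holders who
    actually buy (1.0 means everyone does).
    """
    return orders * follow_through / per_year


full_backlog_years = years_to_clear(open_orders, peak_annual_output)
half_backlog_years = years_to_clear(open_orders, peak_annual_output, follow_through=0.5)

print(f"{full_backlog_years:.1f} years if every reservation converts")
print(f"{half_backlog_years:.1f} years if half convert (hypothetical rate)")
```

If every reservation converts, the backlog works out to just over five years, which matches the estimate above. If only half convert, it is roughly two and a half years.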

It will be interesting to see if the Cybertruck will be offered outside of North America. Currently, those in Tesla’s European and Asian markets can pre-order the truck. That said, the Cybertruck’s large size and hefty weight could make selling it overseas a serious challenge. For example, in several European nations it would have to be classed as a commercial truck or semi.

The visionary CEO says his companies could one day offer hope to amputees by giving them a prosthetic limb that might eventually be better than a biological one.

Elon Musk, the omnipotent ruler of the Twitterverse, has chimed in and has decreed that the actual physical universe is “possibly” twice as old as we think it is.

Make of that what you will.

Musk was responding to noted misinformation peddler and comedian Joe Rogan, who linked to a press release about a controversial new paper that indeed suggests the universe could be 26.7 billion years old, almost twice the age generally accepted by scientists.