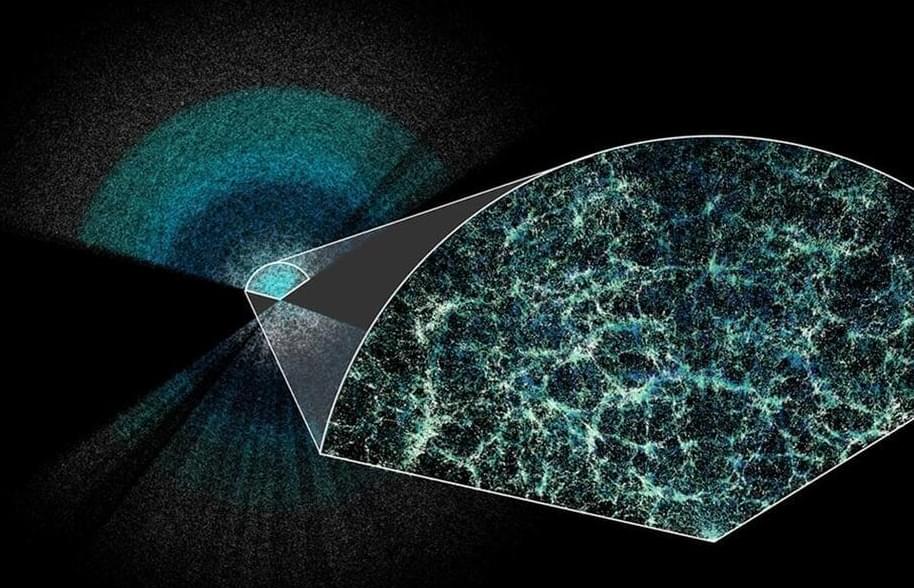

Can an exoplanet’s weather be mapped like weather on Earth and even on some of the gas giants in our solar system? A recent study published in Nature Astronomy set out to answer this question. In it, a team of international researchers used NASA’s James Webb Space Telescope to investigate weather patterns on WASP-43 b, a “hot Jupiter” gas giant exoplanet located approximately 280 light-years from Earth. The study could help astronomers develop new methods and techniques for conducting atmospheric science on planetary bodies light-years from Earth.

WASP-43 b was discovered in 2011 by the La Silla Observatory in Chile using the transit method. It has a radius just slightly larger than Jupiter’s and a mass slightly more than double Jupiter’s, and it completes an orbit around its star in only 0.8 days. Because it orbits so close to its star, WASP-43 b is tidally locked, meaning one side always faces the star while the opposite side always faces away from it. In 2014, NASA’s Hubble Space Telescope conducted its own weather mapping of WASP-43 b. It found that the planet’s atmosphere reflects only a small amount of starlight, in stark contrast to Earth, and it detected the presence of water vapor. WASP-43 b was also observed by the now-retired NASA Spitzer Space Telescope.

“With Hubble, we could clearly see that there is water vapor on the dayside. Both Hubble and Spitzer suggested there might be clouds on the nightside,” said Dr. Taylor Bell, a researcher at the Bay Area Environmental Research Institute and lead author of the study. “But we needed more precise measurements from Webb to really begin mapping the temperature, cloud cover, winds, and more detailed atmospheric composition all the way around the planet.”