For decades, mathematicians have struggled to understand matrices that reflect both order and randomness, like those that model semiconductors. A new method could change that.

While conventional computers store information in the form of bits, fundamental pieces of logic that take a value of either 0 or 1, quantum computers are based on qubits. These can have a state that is simultaneously both 0 and 1. This odd property, a quirk of quantum physics known as superposition, lies at the heart of quantum computing’s promise to ultimately solve problems that are intractable for classical computers.
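In standard quantum mechanics, a single qubit's state is written α|0⟩ + β|1⟩ with complex amplitudes α and β. Measuring it yields 0 with probability |α|² and 1 with probability |β|² (the Born rule). That is the precise sense in which a qubit is "simultaneously both 0 and 1".

The short Python sketch below simulates this classically for one qubit. The function names are hypothetical. It illustrates the math only and does not reflect how any real quantum computer or quantum software library works.

```python
import math
import random
from typing import Tuple


def normalize(alpha: complex, beta: complex) -> Tuple[complex, complex]:
    """Scale the amplitudes so that |alpha|^2 + |beta|^2 = 1 (hypothetical helper)."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("a qubit state cannot have both amplitudes equal to zero")
    return alpha / norm, beta / norm


def measurement_probabilities(alpha: complex, beta: complex) -> Tuple[float, float]:
    """Return (P(0), P(1)) for the state alpha|0> + beta|1> via the Born rule."""
    a, b = normalize(alpha, beta)
    return abs(a) ** 2, abs(b) ** 2


def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement: the superposition collapses to a single 0 or 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


# Equal superposition (|0> + |1>) / sqrt(2): each outcome has probability 1/2.
p0, p1 = measurement_probabilities(1, 1)
print(round(p0, 6), round(p1, 6))  # 0.5 0.5

# Repeated measurements of identically prepared qubits give roughly half 0s, half 1s.
rng = random.Random(0)
counts = [0, 0]
for _ in range(10_000):
    counts[measure(1, 1, rng)] += 1
print(counts)
```

Each individual measurement still returns a single 0 or 1. The superposition shows up only in the statistics over many identically prepared qubits.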

Many existing quantum computers are based on superconducting electronic systems in which electrons flow without resistance at extremely low temperatures. In these systems, the quantum mechanical nature of electrons flowing through carefully designed resonators creates superconducting qubits.

These qubits are excellent at quickly performing the logical operations needed for computing. However, storing information—in this case quantum states, mathematical descriptors of particular quantum systems—is not their strong suit. Quantum engineers have been seeking a way to boost the storage times of quantum states by constructing so-called “quantum memories” for superconducting qubits.

How can the behavior of elementary particles and the structure of the entire universe be described using the same mathematical concepts? This question is at the heart of work by the mathematicians Claudia Fevola from Inria Saclay and Anna-Laura Sattelberger from the Max Planck Institute for Mathematics in the Sciences, recently published in the Notices of the American Mathematical Society.

Mathematics and physics share a close, reciprocal relationship. Mathematics offers the language and tools to describe physical phenomena, while physics drives the development of new mathematical ideas. This interplay remains vital in areas such as quantum field theory and cosmology, where advanced mathematical structures and physical theory evolve together.

In their article, the authors explore how algebraic structures and geometric shapes can help us understand phenomena ranging from particle collisions, such as those in particle accelerators, to the large-scale architecture of the cosmos. Their research is centered on algebraic geometry. Their recent work also connects to positive geometry, a new interdisciplinary subject in mathematics driven by ideas from particle physics and cosmology.

“How quantum mechanics and gravity fit together is one of the most important outstanding problems in physics,” says Kathryn Zurek, a theoretical physicist at the California Institute of Technology (Caltech) in Pasadena.

Generations of researchers have tried to create a quantum theory of gravity, and their work has produced sophisticated mathematical constructs, such as string theory. But experimental physicists haven’t found concrete evidence for any of these, and they’re not even sure what such evidence could look like.

Now there is a sense that insights could be around the corner. In the past decade, many researchers have become more optimistic that there are ways to test the true nature of gravity in the laboratory. Scientists have proposed experiments to do this, and are pushing the precision of techniques to make them possible. “There’s been a huge rise in both experimental capability and our theoretical understanding of what we actually learn from such experiments,” says Markus Aspelmeyer, an experimental physicist at the University of Vienna and a pioneer of this work.

Animals like bats, whales and insects have long used acoustic signals for communication and navigation. Now, an international team of scientists has taken a page from nature's playbook to model micro-sized robots that use sound waves to coordinate into large swarms that exhibit seemingly intelligent behavior.

The robot groups could one day carry out complex tasks like exploring disaster zones, cleaning up pollution, or performing medical treatments from inside the body, according to team lead Igor Aronson, Huck Chair Professor of Biomedical Engineering, Chemistry, and Mathematics at Penn State.

“Picture swarms of bees or midges,” Aronson said. “They move, that creates sound, and the sound keeps them cohesive, many individuals acting as one.”

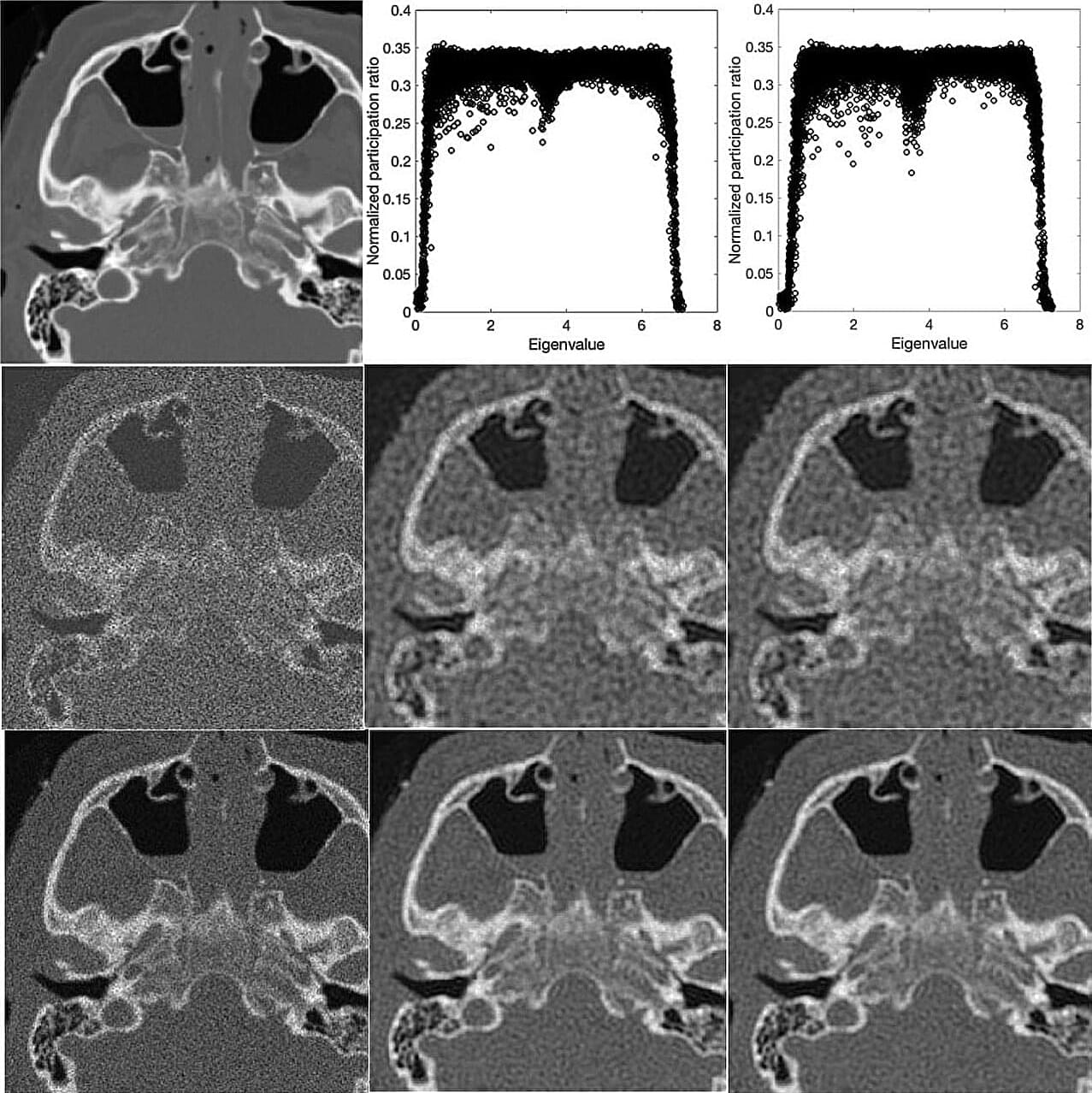

Medical imaging methods such as ultrasound and MRI are often affected by background noise, which can introduce blurring and obscure fine anatomical details in the images. For clinicians who depend on medical images, background noise is a fundamental problem in making accurate diagnoses.

Existing denoising methods have had some success, but they struggle with the complex noise patterns found in medical images and require manual parameter tuning, which makes the denoising process more cumbersome.

To solve the denoising problem, some researchers have drawn inspiration from quantum mechanics, which describes how matter and energy behave at the atomic scale. Their studies draw an analogy between how particles vibrate and how pixel intensity spreads out in images and causes noise. Until now, none of these attempts directly applied the full-scale mathematics of quantum mechanics to image denoising.

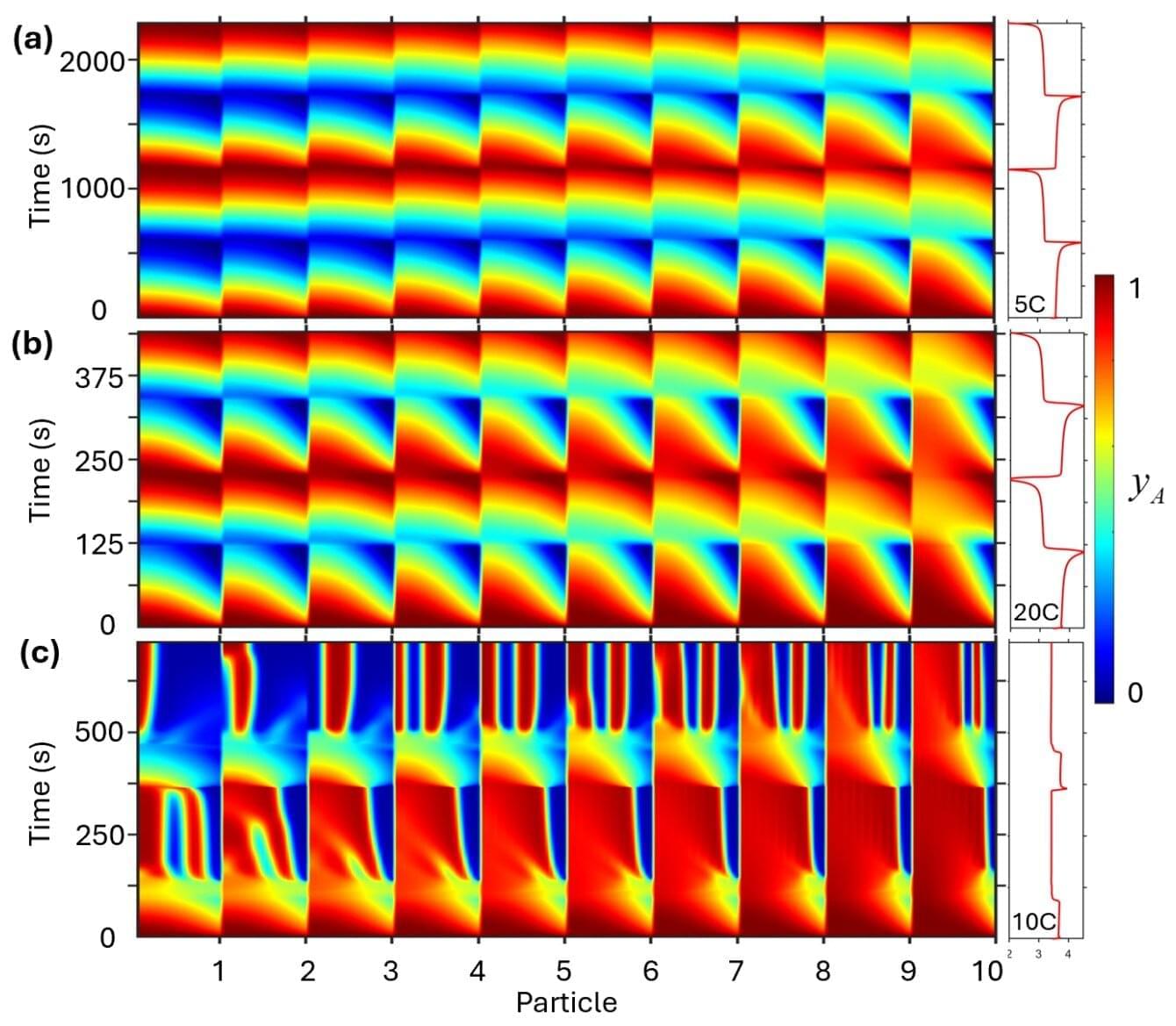

Engineers rely on computational tools to develop new energy storage technologies, which are critical for capitalizing on sustainable energy sources and powering electric vehicles and other devices. Researchers have now developed a new classical physics model that captures one of the most complex aspects of energy storage research—the dynamic nonequilibrium processes that throw chemical, mechanical and physical aspects of energy storage materials out of balance when they are charging or discharging energy.

The new Chen-Huang Nonequilibrium Phasex Transformation (NExT) Model was developed by Hongjiang Chen, a former Ph.D. student at NC State, in conjunction with his advisor, Hsiao-Ying Shadow Huang, who is an associate professor of mechanical and aerospace engineering at the university. A paper on the work, “Energy Change Pathways in Electrodes during Nonequilibrium Processes,” is published in The Journal of Physical Chemistry C.

But what are “nonequilibrium processes”? Why are they important? And why would you want to translate those processes into mathematical formulae? We talked with Huang to learn more.

A machine learning method developed by researchers from the Institute of Science Tokyo, the Institute of Statistical Mathematics, and other institutions predicts the liquid crystallinity of polymers with 96% accuracy. The team screened over 115,000 polyimides and selected six candidates with a high probability of exhibiting liquid crystallinity. After these were synthesized and analyzed experimentally, the resulting liquid crystalline polyimides showed thermal conductivities of up to 1.26 W m⁻¹ K⁻¹, a result that could accelerate the discovery of efficient thermal materials for next-generation electronics.

Finding new polymer materials that can efficiently dissipate heat while maintaining high reliability is one of the biggest challenges in modern electronics. One promising solution is liquid crystalline polyimides, a special class of polymers whose molecules naturally align into highly ordered structures.

These ordered chains create pathways for heat flow, making liquid crystalline polyimides highly attractive for thermal management in semiconductors, flexible displays, and next-generation devices. However, designing these polymers has long relied on trial and error because researchers lacked clear design rules to predict whether a polymer would form a liquid crystalline phase.

Imagine trying to make an accurate three-dimensional model of a building using only pictures taken from different angles—but you’re not sure where or how far away all the cameras were. Our big human brains can fill in a lot of those details, but computers have a much harder time doing so.

This scenario is a well-known problem in computer vision and robot navigation systems. Robots, for instance, must take in lots of 2D information and build 3D point clouds (collections of data points in 3D space) in order to interpret a scene. But the mathematics involved in this process is challenging and error-prone, with many ways for the computer to misestimate distances. It's also slow, because it forces the computer to build its 3D point cloud bit by bit.

Computer scientists at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) think they have a better method: a breakthrough algorithm that lets computers reconstruct high-quality 3D scenes from 2D images much more quickly than existing methods.
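The hard part described above is estimating where each camera was and how far away each pixel is. Once those quantities are known, turning 2D pixels into a 3D point cloud is straightforward geometry.

The sketch below assumes a standard pinhole camera model with known intrinsics (`fx`, `fy`, `cx`, `cy`), a known per-pixel depth map, and a known camera-to-world pose. All names are hypothetical. This is not the SEAS algorithm, whose details the article does not describe. The sketch shows why camera position matters: the same pixels land at different 3D locations when the pose changes.

```python
from typing import List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]

# Identity rotation: camera axes aligned with world axes (an assumption for the demo).
IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def _to_world(cam: Point3D, rotation: Sequence[Sequence[float]],
              translation: Sequence[float]) -> Point3D:
    """Apply the camera-to-world transform: world = R @ cam + t."""
    x, y, z = (
        sum(rotation[i][j] * cam[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return (x, y, z)


def backproject(
    depth: Sequence[Sequence[Optional[float]]],
    fx: float, fy: float, cx: float, cy: float,
    rotation: Sequence[Sequence[float]] = IDENTITY,
    translation: Sequence[float] = (0.0, 0.0, 0.0),
) -> List[Point3D]:
    """Lift every pixel that has a valid depth to a 3D point in world coordinates."""
    points: List[Point3D] = []
    for v, row in enumerate(depth):      # v = pixel row index
        for u, z in enumerate(row):      # u = pixel column index
            if z is None or z <= 0:      # skip pixels with no usable depth
                continue
            # Pinhole model: pixel (u, v) seen at depth z -> camera-frame point.
            cam = ((u - cx) * z / fx, (v - cy) * z / fy, z)
            points.append(_to_world(cam, rotation, translation))
    return points


# A tiny 2x2 depth map; None marks a pixel whose depth could not be estimated.
depth_map = [[2.0, 2.0], [None, 4.0]]
cloud = backproject(depth_map, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(cloud)    # [(0.0, 0.0, 2.0), (2.0, 0.0, 2.0), (4.0, 4.0, 4.0)]

# Same pixels, camera moved 1 unit along x: every point shifts accordingly.
shifted = backproject(depth_map, 1.0, 1.0, 0.0, 0.0, translation=(1.0, 0.0, 0.0))
print(shifted)  # [(1.0, 0.0, 2.0), (3.0, 0.0, 2.0), (5.0, 4.0, 4.0)]
```

Any error in the estimated depth or camera pose carries straight through into the point positions. This is one reason the reconstruction step is error-prone. Processing pixels one by one, as here, also hints at why naive approaches can be slow.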

Physicist and computer scientist Stephen Wolfram explores how simple rules can generate complex realities, offering a bold new vision of fundamental physics and the structure of the universe.

Stephen Wolfram is a British-American computer scientist, physicist, and businessman. He is known for his work in computer algebra and theoretical physics. In 2012, he was named a fellow of the American Mathematical Society. He is the founder and CEO of the software company Wolfram Research, where he works as chief designer of Mathematica and the Wolfram Alpha answer engine.
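A classic illustration of "simple rules generating complexity" from Wolfram's work is the elementary cellular automaton Rule 30. In Rule 30, each cell in a row of 0s and 1s is updated from itself and its two neighbors, yet a single live cell grows into an irregular pattern.

The sketch below is a plain Python simulation under simple assumptions: a finite row, with cells beyond the edges treated as 0. The function names are hypothetical, and this is not code from Wolfram Research.

```python
from typing import List


def step(cells: List[int], rule: int = 30) -> List[int]:
    """Apply one update of an elementary cellular automaton.

    Each new cell depends only on (left, center, right). That 3-bit neighborhood
    is used as a bit index into the 8-bit rule number (Wolfram's numbering).
    Cells beyond the edges are treated as 0.
    """
    n = len(cells)
    new_cells = []
    for i in range(n):
        left = cells[i - 1] if i > 0 else 0
        center = cells[i]
        right = cells[i + 1] if i < n - 1 else 0
        index = (left << 2) | (center << 1) | right
        new_cells.append((rule >> index) & 1)
    return new_cells


def run(width: int = 31, steps: int = 15, rule: int = 30) -> List[List[int]]:
    """Start from a single live cell in the middle and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


# Print each generation as a row: '#' for 1, '.' for 0.
for row in run():
    print("".join("#" if c else "." for c in row))
```

Setting `rule=90` instead produces a regular, nested Sierpiński-like triangle. This contrast shows that the irregularity comes from the particular rule, not from the simulation code.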
