Generally, we are advised not to compare apples to oranges. In topology, a branch of mathematics, however, this comparison is necessary. Apples and oranges, it turns out, are topologically the same because neither has a hole. Doughnuts and coffee cups, by contrast, each have exactly one (in the case of the cup, the hole is in the handle), and so they are topologically equivalent to each other.

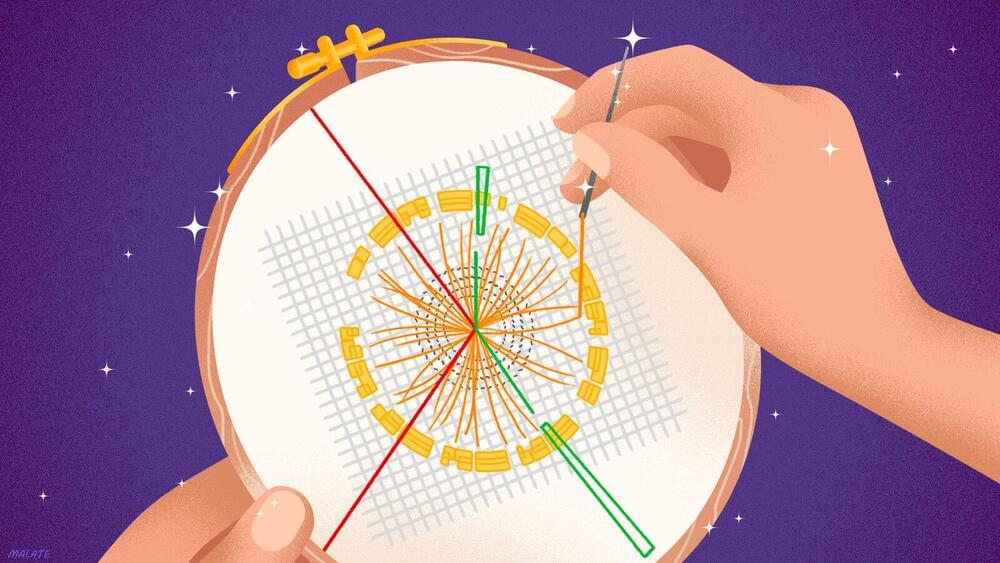

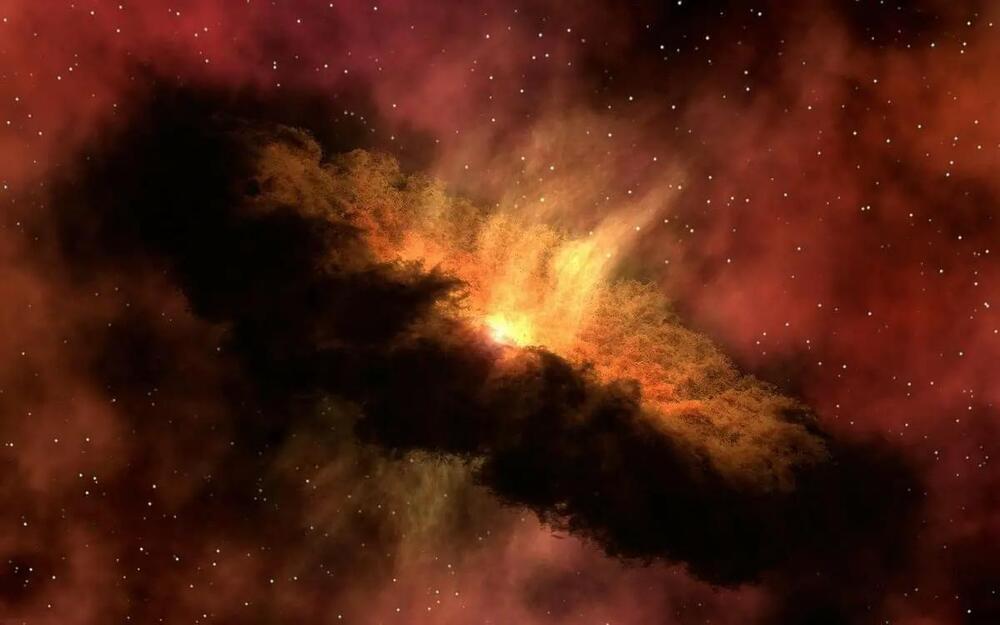

In a more abstract sense, quantum systems in physics can also have an apple or a doughnut topology, which manifests itself in the energy states and motion of their particles. Researchers are very interested in such systems because their topology makes them robust against disorder and other perturbations, which are always present in real physical systems.

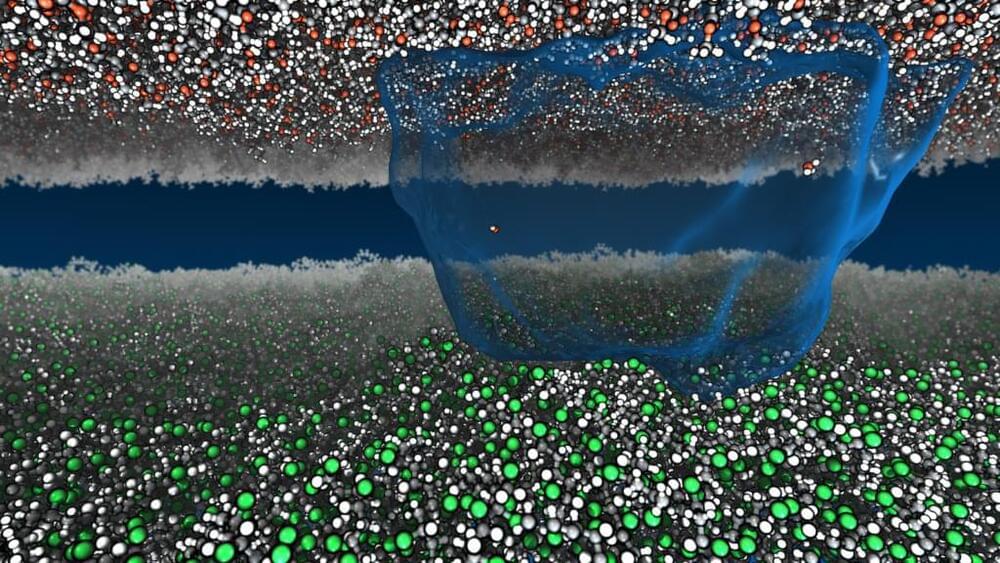

Things get particularly interesting if the particles in such a system also interact, meaning that they attract or repel each other, as electrons in solids do. Studying topology and interactions together in solids, however, is extremely difficult. A team of researchers at ETH led by Tilman Esslinger has now managed to detect topological effects in an artificial solid in which the interactions can be switched on or off using magnetic fields. Their results, which have just been published in the scientific journal Science, could find applications in future quantum technologies.