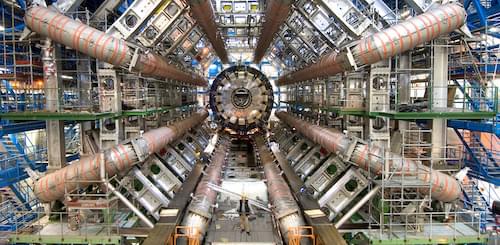

Skoltech scientists have devised a mathematical model of memory. By analyzing the new model, the team came to surprising conclusions that could prove useful for robot design, artificial intelligence, and a better understanding of human memory. Published in Scientific Reports, the study suggests there may be an optimal number of senses. If so, those of us with five senses could use a couple more.

“Our conclusion is, of course, highly speculative in application to human senses, although you never know: It could be that humans of the future would evolve a sense of radiation or magnetic field. But in any case, our findings may be of practical importance for robotics and the theory of artificial intelligence,” said study co-author Professor Nikolay Brilliantov of Skoltech AI.

“It appears that when each concept retained in memory is characterized in terms of seven features—as opposed to, say, five or eight—the number of distinct objects held in memory is maximized.”