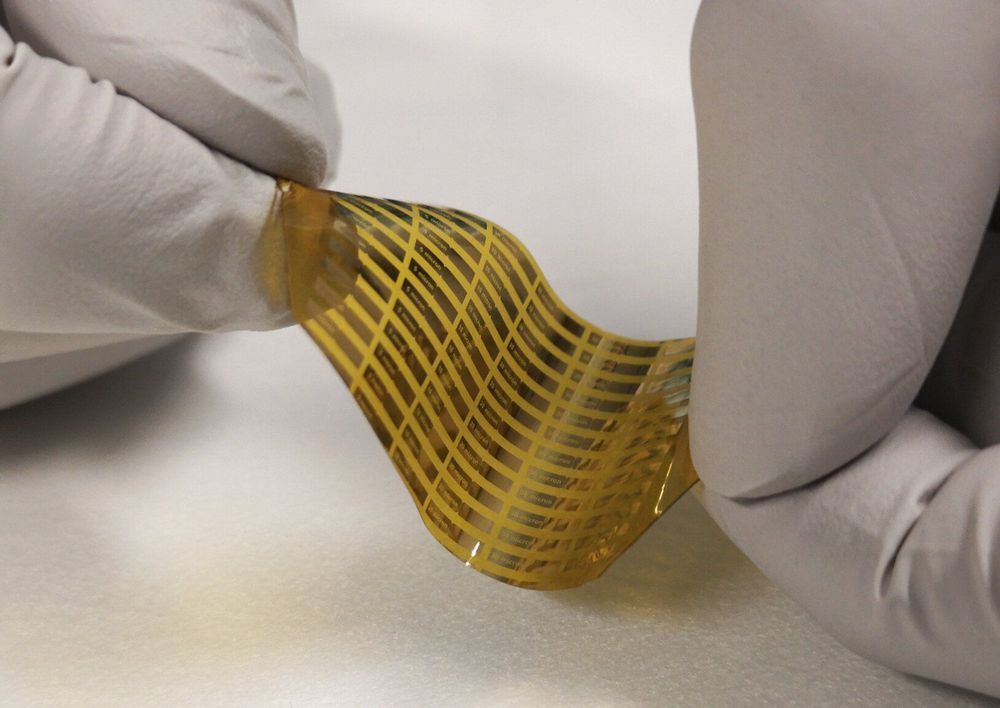

VTT researchers have successfully demonstrated a new electronic refrigeration technology that could enable major leaps in the development of quantum computers. Present quantum computers require large and extremely complicated cooling infrastructure based on a mixture of helium isotopes. The new electronic cooling technology could replace these cryogenic liquid mixtures and enable the miniaturization of quantum computers.

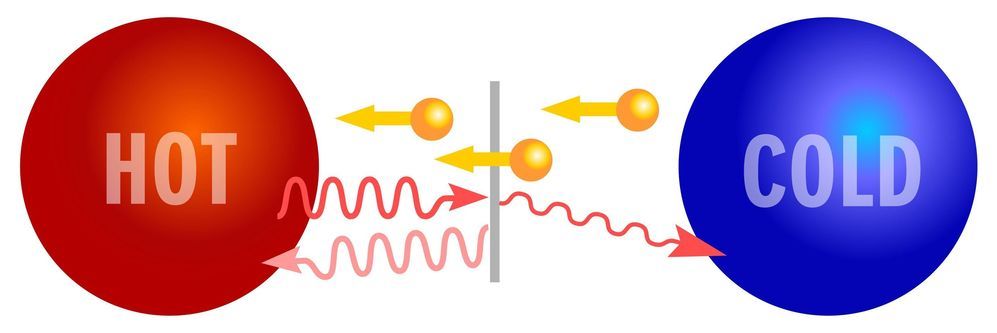

In this purely electrical refrigeration method, cooling and thermal isolation operate effectively through the same point-like junction. In the experiment, the researchers suspended a piece of silicon from such junctions and refrigerated it by feeding electrical current from one junction to another through the piece. The current lowered the thermodynamic temperature of the silicon object by as much as 40% relative to that of the surroundings. This could lead to the miniaturization of future quantum computers, as it could significantly simplify the required cooling infrastructure. The discovery has been published in Science Advances.

“We expect that this newly discovered electronic cooling method could be used in several applications, from the miniaturization of quantum computers to ultra-sensitive radiation sensors for the security field,” says Research Professor Mika Prunnila from VTT Technical Research Centre of Finland.