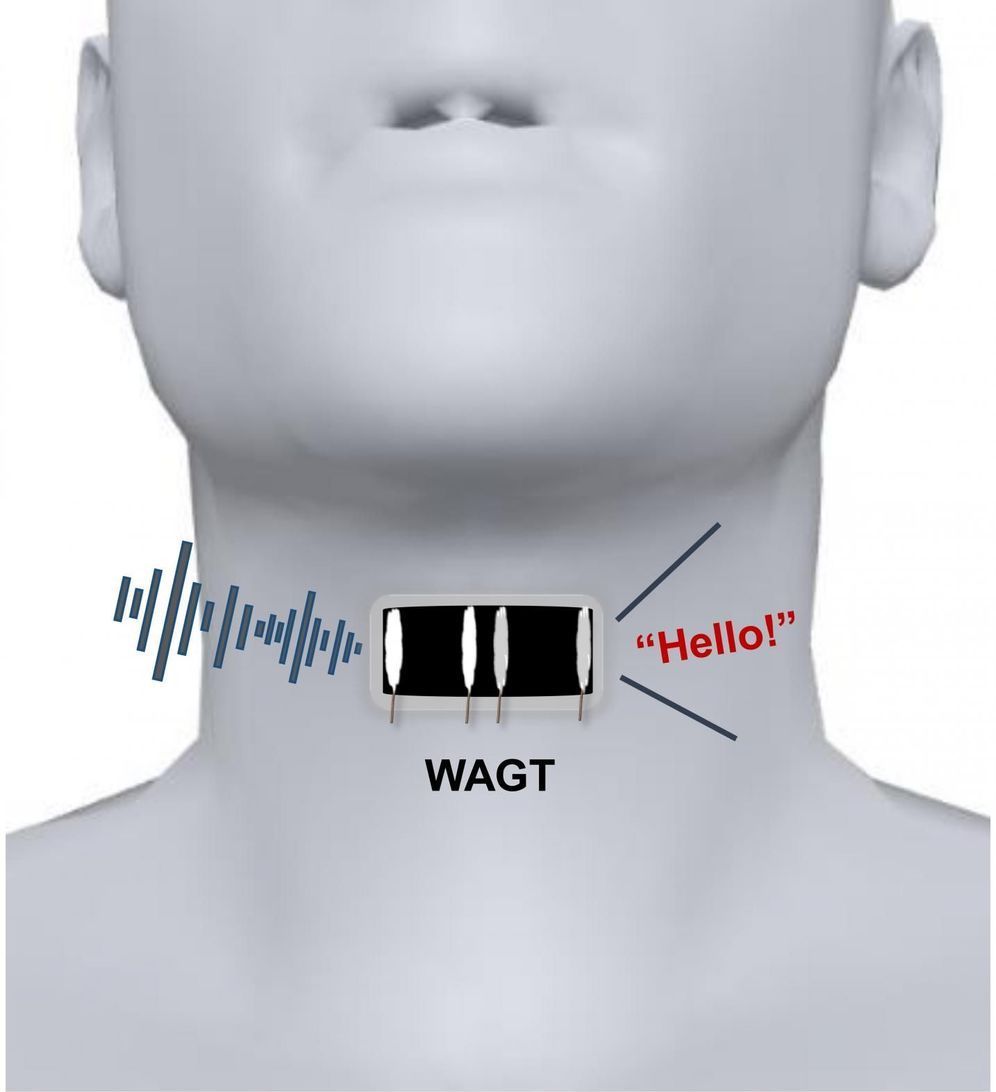

New York, NY—August 12, 2019—Columbia engineers have designed a novel neck brace that supports the neck during its natural motion. It is the first device shown to dramatically assist patients with amyotrophic lateral sclerosis (ALS) in holding their heads up and actively supporting them through their range of motion. This advance would improve patients' quality of life, not only by improving eye contact during conversation but also by facilitating the use of the eyes as a joystick to control movements on a computer, much as scientist Stephen Hawking famously did.

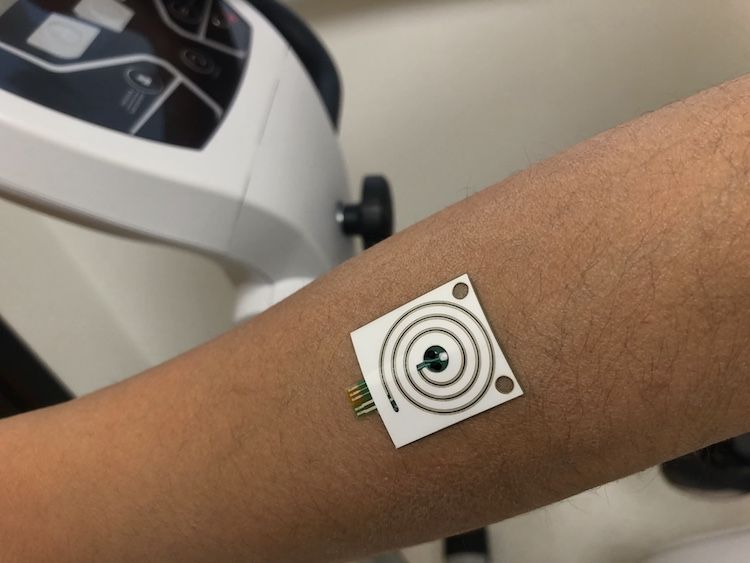

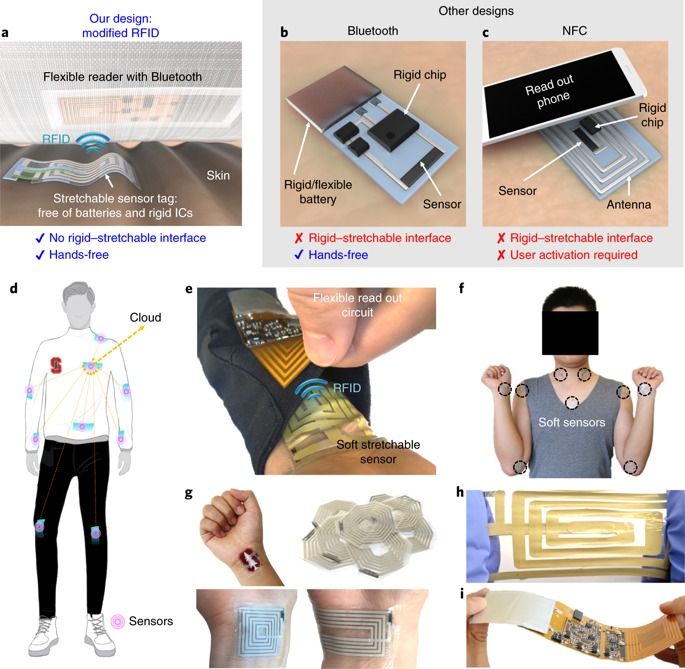

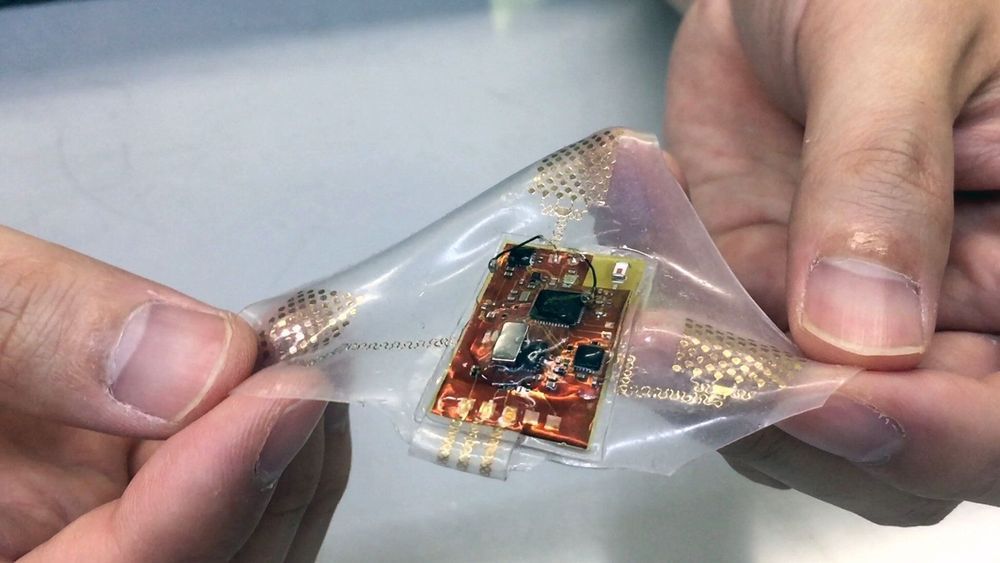

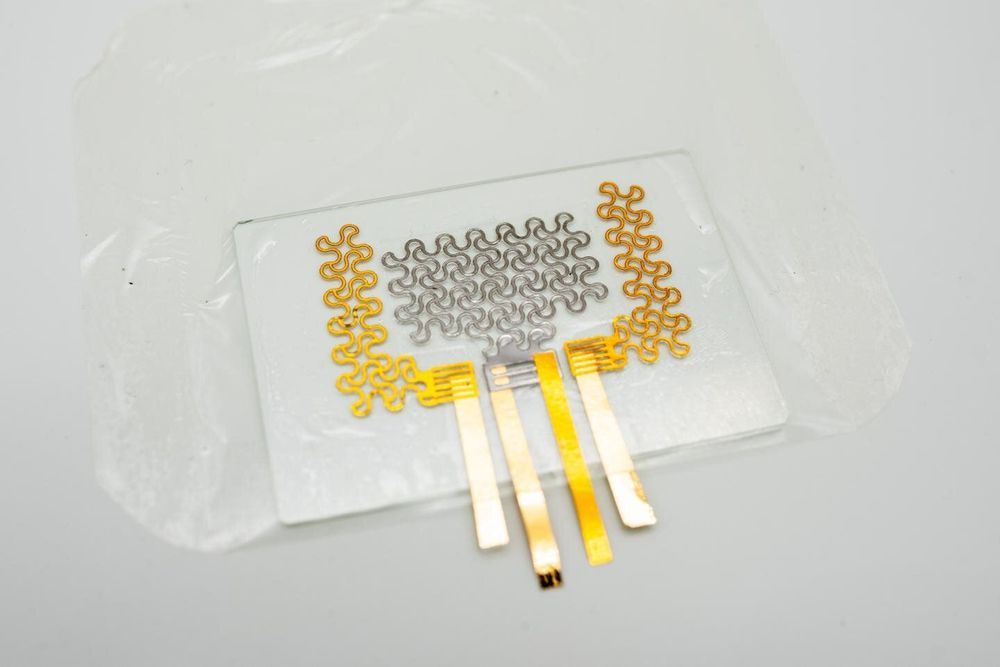

A team of engineers and neurologists led by Sunil Agrawal, professor of mechanical engineering and of rehabilitation and regenerative medicine, designed a comfortable, wearable robotic neck brace that incorporates both sensors and actuators to adjust head posture, restoring roughly 70% of the active range of motion of the human head. By simultaneously measuring motion with sensors on the brace and recording surface electromyography (EMG) of the neck muscles, the device also serves as a new diagnostic tool for impaired head-neck motion. Their pilot study was published August 7 in the Annals of Clinical and Translational Neurology.

The brace also shows promise for clinical use beyond ALS, according to Agrawal, who directs the Robotics and Rehabilitation (ROAR) Laboratory. “The brace would also be useful to modulate rehabilitation for those who have suffered whiplash neck injuries from car accidents or have poor neck control because of neurological diseases such as cerebral palsy,” he said.